Us. And them.

Robots are being created that can think, act, and relate to humans. Are we ready?

Someone types a command into a laptop, and Actroid-DER jerks upright with a shudder and a wheeze. Compressed air flows beneath silicone skin, triggering actuators that raise her arms and lift the corners of her mouth into a demure smile. She seems to compose herself, her eyes panning the room where she stands fixed to a platform, tubes and wires running down through her ankles. She blinks, then turns her face toward me. I can't help but meet her—its—mechanical gaze. "Are you surprised that I'm a robot?" she asks. "I look just like a human, don't I?"

Her scripted observation has the unfortunate effect of calling my attention to the many ways she does not. Developed in Japan by the Kokoro Company, the Actroid-DER android can be rented to serve as a futuristic spokesmodel at corporate events, a role that admittedly does not require great depth of character. But in spite of the $250,000 spent on her development, she moves with a twitchy gracelessness, and the inelasticity of her features lends a slightly demented undertone to her lovely face. Then there is her habit of appearing to nod off momentarily between utterances, as if she were on something stronger than electricity.

While more advanced models of the Actroid make the rounds of technology exhibitions, this one has been shipped to Carnegie Mellon University in Pittsburgh to acquire the semblance of a personality. Such at least is the hope of five optimistic graduate students in the university's Entertainment Technology Center, who have been given one 15-week semester to render the fembot palpably more fem and less bot. They have begun by renaming her Yume—dream, in Japanese.

"Kokoro developed her to be physically realistic, but that's not enough by itself," says Christine Barnes, student co-producer of the Yume Project. "What we're going to do is shift the focus from realism to believability."

The Actroid androids are part of a new generation of robots, artificial beings designed to function not as programmed industrial machines but as increasingly autonomous agents capable of taking on roles in our homes, schools, and offices previously carried out only by humans. The foot soldiers of this vanguard are the Roomba vacuums that scuttle about cleaning our carpets and the cuddly electronic pets that sit up and roll over on command but never make a mess on the rug. More sophisticated bots may soon be available that cook for us, fold the laundry, even babysit our children or tend to our elderly parents, while we watch and assist from a computer miles away.

"In five or ten years robots will routinely be functioning in human environments," says Reid Simmons, a professor of robotics at Carnegie Mellon.

Such a prospect leads to a cascade of questions. How much everyday human function do we want to outsource to machines? What should they look like? Do we want androids like Yume puttering about in our kitchens, or would a mechanical arm tethered to the backsplash do the job better, without creeping us out? How will the robot revolution change the way we relate to each other? A cuddly robotic baby seal developed in Japan to amuse seniors in eldercare centers has drawn charges that it could cut them off from other people. Similar fears have been voiced about future babysitting robots. And of course there are the halting attempts to create ever-willing romantic androids. Last year a New Jersey company introduced a talking, touch-sensitive robot "companion," raising the possibility of another kind of human disconnect.

In short: Are we ready for them? Are they ready for us?

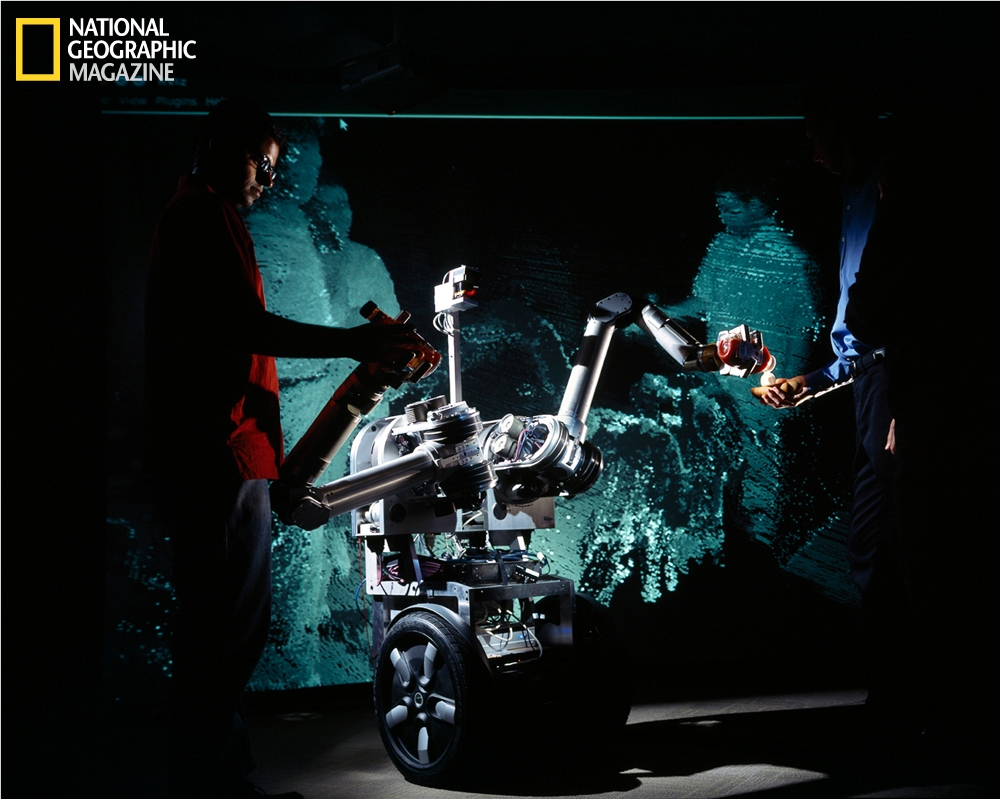

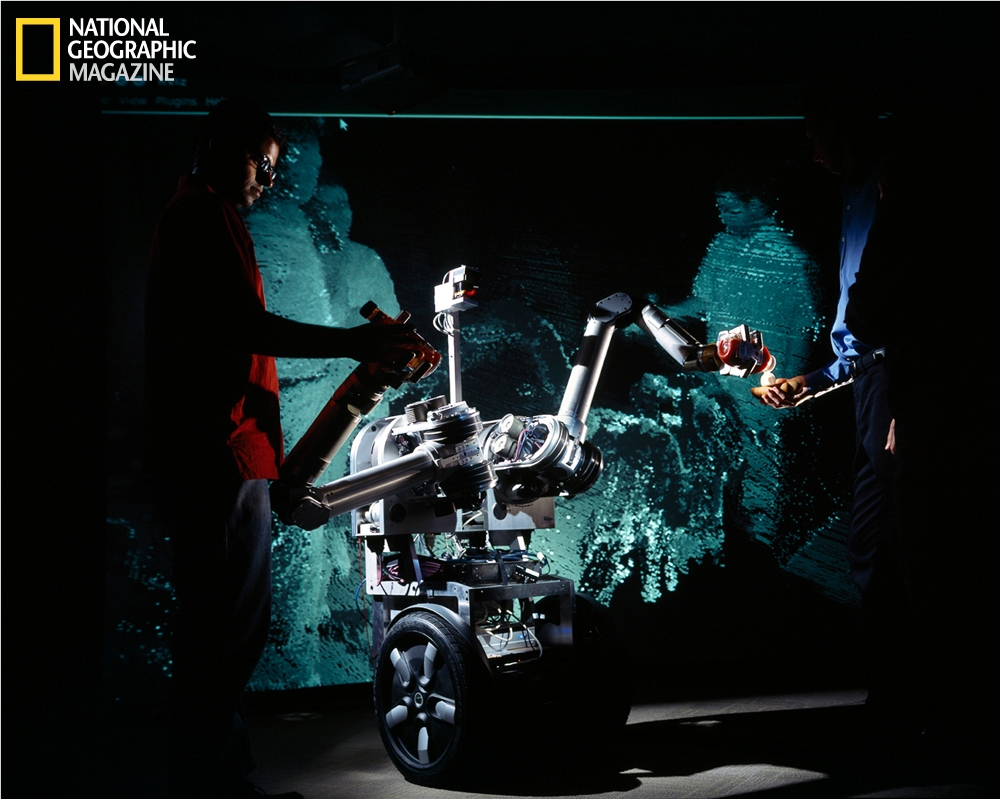

In a building a mile up the hill from the Entertainment Technology Center, HERB sits motionless, lost in thought. Short for Home Exploring Robotic Butler, HERB is being developed by Carnegie Mellon in collaboration with Intel Labs Pittsburgh as a prototype service bot that might care for the elderly and disabled in the not-too-distant future. HERB is a homely contraption, with Segway wheels for legs and a hodgepodge of computers for a body. But unlike pretty Yume, HERB has something akin to a mental life. Right now the robot is improving its functionality by running through alternative scenarios to manipulate representations of objects stored in its memory, tens of thousands of scenarios a second.

"I call it dreaming," says Siddhartha Srinivasa, HERB's builder and a professor at the Robotics Institute at Carnegie Mellon. "It helps people intuitively understand that the robot is actually visualizing itself doing something."

Traditional robots, the kind you might find spot-welding a car frame, can be programmed to carry out a very precise sequence of tasks but only within rigidly structured environments. To negotiate human spaces, robots like HERB need to perceive and cope with unfamiliar objects and move about without bumping into people who are themselves in motion. HERB's perception system consists of a video camera and a laser navigation device mounted on a boom above his mechanical arm. ("We tend to think of HERB as a he," Srinivasa says. "Maybe because most butlers are. And he's kind of beefy.") In contrast to a hydraulic industrial robotic armature, HERB's arm is animated by a pressure-sensing system of cables akin to human tendons: a necessity if one wants a robot capable of supporting an elderly widow on her way to the bathroom without catapulting her through the door.

In the lab one of Srinivasa's students taps a button, issuing a command to pick up a juice box sitting on a nearby table. HERB's laser spins, creating a 3-D grid mapping the location of nearby people and objects, and the camera locks on a likely candidate for the target juice box. The robot slowly reaches over and takes hold of the box, keeping it upright. On command, he gently puts it down. To the uninitiated, the accomplishment might seem underwhelming. "When I showed it to my mom," Srinivasa says, "she couldn't understand why HERB has to think so hard to pick up a cup."

The problem is not with HERB but with the precedents that have been set for him. Picking up a drink is dead simple for people, whose brains have evolved over millions of years to coordinate exactly such tasks. It's also a snap for an industrial robot programmed for that specific action. The difference between a social robot like HERB and a conventional factory bot is that he knows that the object is a juice box and not a teacup or a glass of milk, which he would have to handle differently. How he understands this involves a great deal of mathematics and computer science, but it boils down to "taking in information and processing it intelligently in the context of everything he already knows about what his world looks like," Srinivasa explains.

When HERB is introduced to a new object, previously learned rules inform the movement of his pressure-sensitive arm and hand. Does the object have a handle? Can it break or spill? Srinivasa programmed HERB's grips by studying how humans behave. In a bar, for instance, he watched bartenders use a counterintuitive underhanded maneuver to grab and pour from a bottle. He reduced the motion to an algorithm, and now HERB has it in his repertoire.

Of course the world HERB is beginning to master is a controlled laboratory environment. Programming him to function in real human spaces will be frightfully more challenging. HERB has a digital bicycle horn that he honks to let people know he's getting near them; if a room is busy and crowded, he takes the safest course of action and simply stands there, honking at everybody.

This strategy works in the lab but would not go over well in an office. Humans can draw on a vast unconscious vocabulary of movements—we know how to politely move around someone in our path, how to sense when we're invading someone's personal space. Studies at Carnegie Mellon and elsewhere have shown that people expect social robots to follow the same rules. We get uncomfortable when they don't or when they make stupid mistakes. Snackbot, another mobile robot under development at Carnegie Mellon, takes orders and delivers snacks to people at the School of Computer Science. Sometimes it annoyingly brings the wrong snack or gives the wrong change. People are more forgiving if the robot warns them first that it might make errors or apologizes when it screws up.

Then there are the vagaries of human nature to cope with. "Sometimes people steal snacks from the robot," says one of Snackbot's developers. "We got it on video."

Like many social robots, Snackbot is a cute fellow—four and a half feet tall, with a head and cartoonish features that suggest, barely, a human being. In addition to lowering expectations, this avoids any trespass into the so-called uncanny valley, a term invented by pioneering Japanese roboticist Masahiro Mori more than 40 years ago. Up to a point, we respond positively to robots with a human appearance and motion, Mori observed, but when they get too close to lifelike without attaining it, what was endearing becomes repellent, fast.

Although most roboticists see no reason to tiptoe near that precipice, a few view the uncanny valley as terrain that needs to be crossed if we're ever going to get to the other side: a vision of robots that look, move, and act enough like us to inspire empathy again instead of disgust. Arguably the most intrepid of these explorers is Hiroshi Ishiguro, the driving force behind the uncanny valley girl Yume, aka Actroid-DER. Ishiguro has overseen the development of a host of innovative robots, some more disturbing than others, to explore this charged component of human-robot interaction (HRI). In just this past year he's been instrumental in creating a stunningly realistic replica of a Danish university professor called Geminoid DK, with goatee, stubble, and a winning smile, and a "telepresence" cell phone bot called Elfoid, about the size, shape, and quasi-cuddliness of a human preemie. Once it's perfected, you'll be able to chat with a friend who holds her own Elfoid, and her doll phone's appendages will mimic your movements.

Ishiguro's most notorious creation so far is an earlier Geminoid model that is his own robotic twin. When I visit him in his lab at ATR Intelligent Robotics and Communication Laboratories in Kyoto, Japan, the two of them are dressed head to toe in black, the bot sitting in a chair behind Ishiguro, wearing an identical mane of black hair and thoughtful scowl. Ishiguro, who also teaches at Osaka University two hours away, says he created the silicone doppelgänger so he could literally be in both places at once, controlling the robot through motion-capture sensors on his face so he/it can interact through the Internet with colleagues at ATR, while the flesh-and-blood he stays in Osaka to teach. Like other pioneers of HRI, Ishiguro is interested in pushing not just technological envelopes but philosophical ones as well. His androids are cognitive trial balloons, imperfect mirrors designed to reveal what is fundamentally human by creating ever more accurate approximations, observing how we react to them, and exploiting that response to fashion something even more convincing.

"You believe I'm real, and you believe that thing is not human," he says, gesturing back at his twin. "But this distinction will become more difficult as the technology advances. If you finally can't tell the difference, does it really matter if you're interacting with a human or machine?" An ideal use for his twin, he says, would be to put it at the faraway home of his mother, whom he rarely visits, so she could be with him more.

"Why would your mother accept a robot?" I ask.

Two faces scowl back at me. "Because it is myself," says one.

Before robotic versions of sons can interact with mothers the way real sons do, much more will be required than flawless mimicry. Witness the challenges HERB faces in navigating through simple human physical environments. Other robots are making tentative forays into the treacherous terrain of human mental states and emotions. Nilanjan Sarkar of Vanderbilt University and his former colleague Wendy Stone, now of the University of Washington, developed a prototype robotic system that plays a simple ball game with autistic children. The robot monitors a child's emotions by measuring minute changes in heartbeat, sweating, gaze, and other physiological signs, and when it senses boredom or aggravation, it changes the game until the signals indicate the child is having fun again. The system is not sophisticated enough yet for the complex linguistic and physical interplay of actual therapy. But it represents a first step toward replicating one of the benchmarks of humanity: knowing that others have thoughts and feelings, and adjusting your behavior in response to them.

In a 2007 paper provocatively entitled "What Is a Human?" developmental psychologist Peter Kahn of the University of Washington, together with Ishiguro and other colleagues, proposed a set of nine other psychological benchmarks to measure success in designing humanlike robots. Their emphasis was not on the technical capabilities of robots but on how they're perceived and treated by humans.

Consider the benchmark "intrinsic moral value"—whether we deem a robot worthy of the basic moral considerations we naturally grant other people. Kahn had children and adolescents play guessing games with a cute little humanoid named Robovie. After a few rounds an experimenter would abruptly interrupt just as it was Robovie's turn to guess, telling the robot the time had come to be put away in a closet. Robovie would protest, declaring it unfair that he wasn't being allowed to take his turn.

"You're just a robot. It doesn't matter," the experimenter answered. Robovie continued to protest forlornly as he was rolled away. Of course, the robot's reaction was not what interested the researchers (Robovie was being operated by another researcher); what mattered was the human subjects' response.

"More than half the people we tested said they agreed with Robovie that it was unfair to put him in the closet, which is a moral response," says Kahn.

That humans, especially children, might empathize with an unjustly treated robot is perhaps not surprising; after all, children bond with dolls and action figures. For a robot itself to be capable of making moral judgments seems a more distant goal. Can machines ever be constructed that possess a conscience, arguably the most distinctively human of attributes?

An ethical sense would be most immediately useful in situations where human morals are continually put to the test—a battlefield, for example. Robots are being prepared for increasingly sophisticated roles in combat, in the form of remotely operated drones and ground-based vehicles mounted with machine guns and grenades. Various governments are developing models that one day may be able to decide on their own when—and at whom—to fire. It's hard to imagine holding a robot accountable for the consequences of making the wrong decision. But we would certainly want it to be equipped to make the right one.

The researcher who has gone the furthest in designing ethical robots is Ronald Arkin of the Georgia Institute of Technology in Atlanta. Arkin says it isn't the ethical limitations of robots in battle that inspire his work but the ethical limitations of human beings. He cites two incidents in Iraq, one in which U.S. helicopter pilots allegedly finished off wounded combatants, and another in which ambushed marines in the city of Haditha killed civilians. Influenced perhaps by fear or anger, the marines may have "shot first and asked questions later, and women and children died as a result," he says.

In the tumult of battle, robots wouldn't be affected by volatile emotions. Consequently they'd be less likely to make mistakes under fire, Arkin believes, and less likely to strike at noncombatants. In short, they might make better ethical decisions than people.

In Arkin's system a robot trying to determine whether or not to fire would be guided by an "ethical governor" built into its software. When a robot locked onto a target, the governor would check a set of preprogrammed constraints based on the rules of engagement and the laws of war. An enemy tank in a large field, for instance, would quite likely get the go-ahead; a funeral at a cemetery attended by armed enemy combatants would be off-limits as a violation of the rules of engagement.

A second component, an "ethical adapter," would restrict the robot's weapons choices. If an overly powerful weapon would cause unintended harm (say, a missile that might destroy an apartment building in addition to the tank), the ordnance would be off-limits until the system was adjusted. This is akin to a robotic model of guilt, Arkin says. Finally, he leaves room for human judgment through a "responsibility adviser," a component that allows a person to override the conservatively programmed ethical governor if he or she decides the robot is too hesitant or is overreaching its authority. The system is not ready for real-world use, Arkin admits, but it is something he's working on "to get the military looking at the ethical implications. And to get the international community to think about the issue."

Back at Carnegie Mellon it's the final week of the spring semester, and I have returned to watch the Yume Project team unveil its transformed android to the Entertainment Technology Center's faculty. It's been a bumpy ride from realism to believability. Yan Lin, the team's computer programmer, has devised a user-friendly software interface to more fluidly control Yume's motions. But an attempt to endow the fembot with the ability to detect faces and make more realistic eye contact has been only half successful. First her eyes latch onto mine, then her head swings around in a mechanical two-step. To help obscure her herky-jerky movements and rickety eye contact, the team has imagined a character for Yume that would be inclined to act that way, with a costume to match—a young girl, according to the project's blog, "slightly goth, slightly punk, all about getting your attention from across the room."

That she certainly does. But in spite of her hip outfit—including the long fingerless gloves designed to hide her zombie-stiff hands and the dark lipstick that covers up her inability to ever quite close her mouth—underneath, she's the same old Actroid-DER. At least now she knows her place. The team has learned the power of lowering expectations and given Yume a new spiel.

"I'm not human!" she confesses. "I'll never be exactly like you. That isn't so bad. Actually, I like being an android." Impressed with her progress, the faculty gives the Yume team an A.

The next month technicians from the Kokoro Company come to pack Actroid-DER for shipment back to Tokyo. Christine Barnes, who'd unsuccessfully lobbied to keep the android at the Entertainment Technology Center, offers to cradle its lolling head as they maneuver it into a crate. The men politely decline. They unceremoniously seal Yume up, still wearing her funky costume.
